I have recently created a secondary and more specialised blog called ‘Semantic Integration Therapy‘ given my focus is now beginning to shift to this particular discipline. Semantic Integration in my terminology and context relates to achieving more effective application integration and SOA solutions by extending the traditional integration contract (XSD and informal documentation) with a more sementically aware mechanism. As such I’m now deep-diving into the use of XMLSchema pluse Schematron, RDF, OWL and commercial tooling such as Progress DXSI. There is a heavy overlap with the Semantic Web Community, but my focus is moreso on the transactional integration space within the Enterprise, as opposed to the holistic principles of the third generation or semantic web.

Where a Semantic Contract Fits…

October 10, 2008I’ve been posting about the rise of the informal semantic contract relating to web-services and the deficiencies of XML Schema in adequately communicating the capability of anything other than a trivial service. Formalising a semantic contract by enriching a baseline structural contact (WSDL/XSD) with semantic or content-based constraints, effectively creates a smaller window of well-formedness, through which a consumer must navigate the well-formedness of their payload in issuing a request. Other factors such as incremental implementation of a complex business service ‘behind’ the generalised service interface compound the need for a semantic contract.

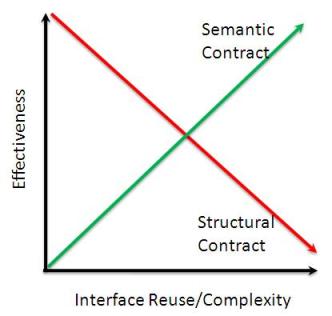

To clarify the relationship between structural and semantic, I happened upon a great picture which I’ve annotated…

The Rise of the Semantic Contract

October 9, 2008This is my stake in the ground for now. SOA in the market-place places total emphasis on 2 things. Web Services as a basis for communication and Re-use as a basis for convincing the boss to put some cash into your middleware bunker..no…play-pen…err…seat of learning.

In addition the militant splinter-groups of the new-wave of RESTafarians (of whom I am an empathising skeptic on this specific 🙂 point about service specification) call for the death of WSDL and the reliance on (WSDL-lite) WADL in the case of the less extremist, but plain-old inference from sample instance documents in the case of the hard-core….

I am finding myself sailing down the no-mans land between these two polarised viewpoints, and see the need for specification in the more complex end of the interface spectrum, but similarly don’t see how specifications help when decoding the specification is harder than inferring from samples when interface contracts are relatively intuitive. So there we have a basic mental picture of my map of the universe.

Now I’m getting to the point.

I’m now convinced that SOA’s push for re-use established through WSDL everywhere, but equally the more recent RESTafarian voices relating to unspecification both have flaws when we are attempting to open up a generalised interface into a service endpoint capable of dealing with a range of entity variants (say product types for example).

My view here is that in the SOA landscape, the static and limited semantic capability of XMLSchema, and in the RESTian lanscape, the inability of humans to infer correctness without a large number of complex instance document snapshots, leads me to the conclusion that there is a vast, yawning, gaping, chasm of understanding in what constitutes an effective contract in locking down the permissible value permutations – aka the semantic contract.

I’ve seen MS-Word. I’ve seen MS-Excel. I’ve seen bleeding-eyes-on-5-hour-conference-calls-relating-to-who-means-what-when-we-say-customer, and best of all I’ve seen hardwired logic constructed in stove-pipes behind re-usable interfaces, aka lipstick on the pig.

I reckon the semantic contract – the contract locking down the permissible instances is far more important than the outer structural contract who’s value decays as the level of re-use and inherent complexity of the interface increases. In addition there are likely to be multiple iterations/increments of a semantic contract within the context of a structural contract as service functionality is incremented over successive iterations – adding product support incrementally to an ordering service of example. This leads to to the notion of the cable cross-section:

In the SOA context…WSDL drives tooling to abstract me from the structural contract. But the formation of the semantic contract as the expression of what the provider is willing to service via that re-usable and loose structural contract is the key to effective integration.

If we don’t pay this enough respect we’ll be using our system testing to mop-up the simple, avoidable instance-data related problems that could be easily avoided if we’d formalised the semantic contract earlier in the development lifecycle…

Powered by Qumana

Enterprise SOA, Continuous Integration and DXSI

September 5, 2008 Creating an approach to CI’ing large scale enterprise SOA initiatives has unearthed a potentially significant efficiency gain in the semantic layer. Semantics relate to instance data – and specifically in the context of re-usable, extensible service interfaces the semantic challenge eclipses that of achieving syntactical alignment between consumer and provider.

Creating an approach to CI’ing large scale enterprise SOA initiatives has unearthed a potentially significant efficiency gain in the semantic layer. Semantics relate to instance data – and specifically in the context of re-usable, extensible service interfaces the semantic challenge eclipses that of achieving syntactical alignment between consumer and provider.

Evidence shows that the vast proportion of integration failures picked up in testing environments (having taken the hit to mobilise a complex deployment of a range of components) are related to data/semantics, not syntax.

As such I’ve been focusing on how to front-end the verification of a consumer ‘understanding’ the provider structurally and semantically from day 1 of the design process. The CI framework I’m putting together makes use of a traditional set of artifact presence/quality assessment, but significantly introduces the concept of the Semantic Mock (SMOCK) – which is an executable component based on the service contract with the addition of a set of evolving semantic expressions and constraints.

This SMOCKartifact allows a service provider to incrementally evolve the detail of the SMOCK whilst having the CI framework automatically acquiring consumer artifacts such as static instance docs or dynamic harnesses (both manifesting earlier in the delivery process than the final service implementation (and I mean on day 1 or 2 of a 90 day cycle as opposed to being identified through fall-out in formal test-environments or worse than that – in production environments for example).

Over time as both consumer and provider evolve through and beyond the SMOCK phase, the level of confidence in design integrity is exponentially improved – simply based on the fact that we’ve had continuous automated verification (and hence integration) of consumer and provider ‘contractal bindings’ for weeks or months. This ultimately leads to a more effective use of formal testing resource and time in adding value as opposed to fire-fighting and kicking back avoidable broken interfaces.

The tool I’m using to protoype ths SMOCK is Progress DXSI. This semantic integration capability occupies a significant niche by focusing on the semantic or data contract associated with all but the most trivial service interfaces. DXSI allows a provider domain-expert to enrich base artifacts (WSDL/XSD) and export runnable SMOCK components which can then be automatically acquired, hosted and exercised (by my CI environment) to verify consumer artifacts published by prospective consumers of the service. Best of all kicking back compliance reports based on the semantic constraints being exercised in each ‘test case’ such that my ‘CI Build Report’ includes a definition of why ‘your’ understanding of ‘my’ semantic contract is flawed…

Beyond SMOCK verification – DXSI also allows me to make a seamless transition into a production runtime too but that’s another story…

Powered by Qumana

This Week’s Micro-Obsession

July 21, 2008July + Sunshine + Arctic Wind =>

Geek + Wifi + (Geek Fuel == Books) + Garden =

Green Spiky Mental Energy

Having spent some months working in an application centric continuous integration and test-oriented developement project I am now wondering how I ever survived without it? The mental loading you have to bear if you manage the ‘virtual’ CI in your head is a pretty heavy price and detracts from the creativity at the sharp end as a result of mental-resource-contenton. Having experienced the CI model for the first time, I’m a complete convert and have been amazed how simple it is to create a very effective CI process out of public-domain tools…

Having spent some months working in an application centric continuous integration and test-oriented developement project I am now wondering how I ever survived without it? The mental loading you have to bear if you manage the ‘virtual’ CI in your head is a pretty heavy price and detracts from the creativity at the sharp end as a result of mental-resource-contenton. Having experienced the CI model for the first time, I’m a complete convert and have been amazed how simple it is to create a very effective CI process out of public-domain tools…

Now exploring if the same automation can actually be exploted to the same extent, with the same degree of certainty being introduced into large scale integration projects through a synergy of SOA and Contract-First design philosophy, Cross Domain Test Driven Development where I write tests against your contract, Continuous Integration meaning explicit verification of tests against contracts and implementations of contracts. This takes the concept I’ve been hearing about – that associated with ‘integration of components’ witin an application or system, and expands it out to sit across numerous application / system engineering projects to provide an overarchiing, verifiable build or ‘integraiton’ process triggered by modifications to key aspects of what defines the inter-domain contract.

Interesting challenge…

More later.

Powered by Qumana

Enterprise Integration: SOA “re-use” is a wolf ?

July 16, 2008 Is our focus on achieving Service re-use actually harming the work we’re doing in the creation of the SOA? Are we so focused on the re-use model that we’re damaging the implementation of the SOA blueprint? Let me try explain why I believe this is the case.

Is our focus on achieving Service re-use actually harming the work we’re doing in the creation of the SOA? Are we so focused on the re-use model that we’re damaging the implementation of the SOA blueprint? Let me try explain why I believe this is the case.

Re-use is a measure of what? In some cases it means how much common code is duplicated and leveraged in new software developments. In other cases it means the more literal measure of concurrent exploitation of a shared resource. In our SOA governance structure, re-use is definitely analagous to the latter case – the one-size-fits-all coarse grained, generic service that enables me to service all variants of any particular requirement through a single, common service interface. Breathe………..!

That’s great for the provider who can now hide behind the ‘re-usable’ facade, pointing knowingly at the SOA governance literature every time you try to have the conversation that his service requires a PhD in data-modelling and xml cryptography to use it?

“But it’s re-usable” comes the reply as you weep, holding out your outstretched arms, cradling the tangled reams of xml-embossed printer-paper representing the only ticket into the service you need to use to avoid being branded a heretic. “Are you challenging the SOA governance policy? Let me just make that call to the department of SOA Enforcement…err I mean the Chief Architect…what’s your name again?” comes the prompt follow-up as the hand reaches for the phone…

I’m now convinced that re-use is a proverbial wolf in sheep’s clothing. We must look more closely at the cost of creating and operating these ‘jack-of-all-trades’ services, not just the fact that we can create them. If it transpires that we’re incurring more cost (both financial and operational) at both the provider-side and the consumer-side, by virtue of aspiring to ‘re-use through generalisation’ then we have truly lost the plot. Could this be part of the reason that SOA ROI is such a difficult subject to discuss and often results in a “year-n payback” kind of response?

I also think there’s an analogy to make here. Take one of our local government services which are considered part of the service fabric of our community. If the services are made too generalised and therefore spawn highly complex forms and a high level of complex dialogue in face-to-face scenarios, who is that helping? Yes we can say that we’ve consolidated ‘n’ simpler services into a single generalised service and saved on office infrastructure and so forth. But if we then increase the cost of processing requests for that generalised service both in terms of ‘steps’ to get from the generalised input to the specific action (therefore requiring larger offices in which to house the longer queues) and also in terms of now having to cater for the increased and excessive fall-out volumes based on the fact that it’s just so damn complex to fill the forms in (therefore requiring even larger offices to in which to house the even longer queues)….then we really missed the point about re-use.

It makes complete sense to look to generalise and re-use in the SOA design-space, such that we can converge similar designs to a common reference model and avoid the unconstrained artistry of technicians with deadlines. In terms of service contracts and interface specifications through, translating that design-time re-use to a wire-exposed endpoint seems like we’re stopping short of the ultimate goal of accelerating re-use by empowering consumers to bond more efficiently with that service.

Powered by Qumana

Enterprise Integration: Don’t Look Down !

July 4, 2008 Can SOA truly be successful if service consumers have to be technology consumers? The service layer is supposed to insulate us from the technical complexities and dependencies of the enabling technology, but I see more and more the technology being the centre of attention.

Can SOA truly be successful if service consumers have to be technology consumers? The service layer is supposed to insulate us from the technical complexities and dependencies of the enabling technology, but I see more and more the technology being the centre of attention.

The promise of Web Serivces whilst standardising on the logical notion of integration, has embroiled us in a complexity relating not to the ‘act’ of exchanging documents, but instead relating to the diversity within the Enterprise, the various technologies and tools used across a widely disparate ecosystem, and debating the finer details of which interpretations of which standards we want to use.

More significantly the large deployed base of messaging software, and the service endpoints exposed to MQ or JMS endpoints are left out of the handle-cranking associated with the synchronous style of endpoint. As such – a SOA layering ‘consistency’ across such a diverse ecosystem is a myth in my experience. We’re still struggling to find the SOA ‘blue-touch-paper’ despite all of the top-down justification and policy.

I believe that until we push the technology further down towards the network such that it becomes irrelevant to the service consumer, and raise the service interaction higher up in terms of decoupling the ‘interaction’ from the ‘technology’ we are going to struggle to not only justify the benefit of service orientation, but more significantly we’ll continue to struggle to justify the inevitable rework and technical implications of that service orientation in mandating conformance to brittle and transient technical standards.

I’m going to explore an approach to doing this – by encapsulating middleware facilities as RESTian resources, and then looking at the bindings between WSDL generated stubs and these infrastructure resources…effectively removing technologies (apart from the obvious RESTian implications) from the invocation of a web-service. Various header indicators can flex QoS expectations in the service invocation (i.e. synch or asynch, timeouts, exception sinks etc) but that has no relationship to any given protocol or infrastructure type. Furthermore, the existence of such a set of ‘resource primitives’ would enable direct interaction where WSDL-based integration does not yet exist…where I resolve, send, receive and validate though direct interaction with RESTian services from any style of client-side application.

This is motivated purely by the belief that, much like the chap in the picture, our focus is on the endpoint and not what lies beneath…

Enterprise Integration: The Target Architecture

June 30, 2008 Refactoring architectural roadmaps. Where to start after time in the wilderness? Surveying the scale of the landscape, evolving mass of vendors, old and new initiatives and legacy sprawl. Getting that cold feeling in the pit of the stomach, like the one you get when the ‘flight’ option is removed from the ‘fight or flight’ juncture…

Refactoring architectural roadmaps. Where to start after time in the wilderness? Surveying the scale of the landscape, evolving mass of vendors, old and new initiatives and legacy sprawl. Getting that cold feeling in the pit of the stomach, like the one you get when the ‘flight’ option is removed from the ‘fight or flight’ juncture…

So how do you actually make headway and add real value to the enterprise?

My revised target architecture is summarised in the following image. We need keep the technology partners trolls under the bridges, and make sure our diverse users know where the bridges are, how wide they are, how much it costs to build one, what it costs to keep a bridge safe, and make them forget about the noises down below…

Phase 2 will entail sealing the foundations in concrete we mixed ourselves, and re-routing the rivers. Therefore no space for ‘noise’ from the space beneath…happy times.

I’m starting to see the light…

Powered by Qumana

Enterprise Integration : QoS is What not How…

June 30, 2008Looking at reasons why we adopt certain technical approaches to enterprise systems integration I’m increasingly concerned by a ‘how’ approach, using proprietary, expensive technology to create relatively simple styles of interaction effectively eclipsing the ‘what’. This ‘how’ approach appears to be rooted in technical policy and commercial structures rooted in the past, during which times proprietary/vendor solutions had more credibility than home-grown options. However looking at the ongoing commoditisation of application integration, the dominance of the web, and all manner of open-source technical options, it strikes me that we need to review our position, and attempt to find ways of selectively breaking out of the vicious circle of ‘licence renewal’ and ‘false economy’ in favour of a more blended approach.

Systems integration is dominated by TLA’s with a heavy vendor influence, and as such it’s easy to get lost in the cloud of complexity associated with MOM, JMS, MQ, XA, SSL, PKI, REST, HTTP, SYNCH, ASYNCH, PERSISTENT, QOS, etc. It’s no surprise, therefore that systems integration shoulders the burden of the difficult stuff getting brushed under the ‘proverbial carpet’ as opposed to the aspiration of the ‘intelligent application-aware network’. As that carpet gets ‘lumpier’, we throw more TLA’s into the mix and add more and more intelligence into the network, resulting in more complexit and a vicious circle. It’s no surprise that we’ve struggled to gain the confidence of the enterprise to the extent where we are in a position to move to commoditise what has become a very complex array of sticking plasters upon sticking plasters.

Systems integration is dominated by TLA’s with a heavy vendor influence, and as such it’s easy to get lost in the cloud of complexity associated with MOM, JMS, MQ, XA, SSL, PKI, REST, HTTP, SYNCH, ASYNCH, PERSISTENT, QOS, etc. It’s no surprise, therefore that systems integration shoulders the burden of the difficult stuff getting brushed under the ‘proverbial carpet’ as opposed to the aspiration of the ‘intelligent application-aware network’. As that carpet gets ‘lumpier’, we throw more TLA’s into the mix and add more and more intelligence into the network, resulting in more complexit and a vicious circle. It’s no surprise that we’ve struggled to gain the confidence of the enterprise to the extent where we are in a position to move to commoditise what has become a very complex array of sticking plasters upon sticking plasters.

Shifting the focus to ‘the web’ and my interactions across that global ‘unreliable’, diverse, evolving network. I note initially that I have a natural understanding of what I want to happen each time I select one or my many application’s. My mail client gives me the ability to create asynchronous 1:1 or 1:n distribution flows, with the ability of conveying large payloads and attachments. My instant messenger client allows me to enage in synchronous 1:1 or 1:n interactions, my feed-reader will sink event streams at a frequency I define. My blog client let’s me cache up a range of 1:n broadcast documents which are periodically published to a hosting platform. My twitter client lets me post informal event snippets. The list goes on.

The key point here is that I care not how any of my selected applications undertake my requested interaction, and in all honesty (even though I’ve implemented countless protocols in my time) I don’t have the time to care because if they are working ok, then I understand the QoS I expect, and if I don’t get that QoS I vote with my feet. We sometimes hear terms like Jabber, XMPP, SMTP, POP3, HTTP, HTTPS, IRC, etc. in assocation with the applications we use, but I would argue that only a tiny proportion of users of Thunderbird/Outlook actually have any clue about the implication of those SMTP settings at a technical level.

So to my conclusion. Systems integrators (and adopters of such technology in the enterprise) evolved from a time and a market-place differentiated by the ‘how’ factor. Subsequent up-selling on that original platform has created a momentum of expectation and desire in the technology consumer space, by constantly mapping the ‘how’ factor to the ‘what’ factor, eclipsing the emergence of a more commoditised alternative gaining a foothold. This is a highly effective point of attack when coupled with a reminder of achieving ROI on the last n-years of similar investment right ?!

The web, by contrast has simplifed and commoditised the same kind of end product, and evolved a user community purely focused on the ‘what’, without a care for the ‘how’ factor. As such it is relatively simple to decouple a user of a web-application from any underlying technical infrastructure, so long as the QoS and the ‘what’ factor is maintainted. Would I even know if my Thunderbird/Outlook mail client began using a competely different store-n-forward protocol? I don’t believe I would.

I believe we need to bring this learning into the enterprise space, and detatch our ‘how’ users from the underlying detail and coaching them to become ‘what’ users, such that we in the integration layer can get to work commoditising the technical fabric in such a way that we do gain the necessary ‘specialisation’ from key vendors, but in the main, we regain control/choice of what it takes to actually pass an ‘xml document’ from source to destination, across a trusted network we control, and guarantee it gets there in one piece.

We seem to have missed this…as it doesn’t cost me six 0’s to hook into, and run an effective real-time business over the web now does it?

Powered by Qumana

Bring Back Tight Coupling and P2P Integration !?

March 18, 2008So we aspire to loose-coupling, re-usable interfaces model abstraction as a means of implementing our SOA. Why? Well we’re told that the alternative is bad ! That alternative is unconstrained tactical wiring between applications, with the resulting unsustainable wiring being the essence of bad practice. I do agree to a point about the unconstrained integration being a bad thing, but there’s also some marketing greyness I need to dispel.

Point-to-Point or tactical integration is the term used to describe the creation of an application integration solution between 2 components, where the aspiration, the design, and the solution is only concerned with that specific requirement at that point in time. Shock horror – who would do such a thing?! Well there’s plenty of reasons for why this kind of approach may be suitable in some scenarios – in fact this IS the most popular approach to integration right!

However the subtle difference between the archetypal P2P interface and a reusable service is in how the design is approached – bear in mind P2P interactions still exist via re-usable services too. Has the interface been based on open standards in the infrastructure layer, has the interface been abstracted at a functional and information level to support additional dimensions (i.e. products, customer types etc) over time? Whether we use web-service technology or not we can still create re-usable services in the application integration landscape.

Now at the other end of the food chain we have our new-friend the coarse-grained, heavily abstracted, reusable Business Services driven out of the mainstream SOA approach to rewiring the Enterprise. Here we have, from the outside looking in, a single exposure for a complex array of related functions (i.e. multi dimensional product ordering), based on WSDL/SOAP/XSD/XML/WS* standards. This kind of approach is the current fashion, and is purported to simplify integration. Wrong!

What we do find is that the new, extensible interface simply creates a thin but strategic veil over the previous P2P interfaces, and effectively causes 2 areas of complex integration. Firstly – behind the new exposure, the service provider has to manage the mediation of an inbound request across his underlying domain models. Secondly the consumers of this newly published service have to deal with their own client-side mediation to enable their localised dialects to be transformed into a shape which can traverse the wire and be accepted by the remote service provider – or at least by the new strategic facade.

My point here is that SOA and the inherent style of wrapping functionality introduce integration challenges in their own right! So it’s not all rosy in the SOA garden, and this is where I’m seeing opportunity for a hybrid approach….and (appologies for the heresy, I’ll burn in hell if I’m wrong) a resurgence of P2P runtime integration based around a well managed reusable service design process.

Eh!? Have I unwittingly turned to the dark-side?

What I mean is P2P is OK if the cost of change is minimal – and if we minimise the client specific aspect we can reduce this cost to a point where it’s comparable to that of the alternative of exposing the common model to the wire. In traditional approaches, cost of change is high because the entire design of the solution was hardwired to one specific purpose. Introduce a requirement to flex that solution and we have to rip and replace. However if the P2P ‘design’ is managed correctly and involves the creation of mappings between a common model and the provider domain models, then in addition to exposing that generic interface to the wire, we have a facility to enable the consumers of the service to declaratively derive their own native transformations, which can cut out a transformation step in the runtime.

If we use a toolset such as Progress DXSI, for capturing the Service Provider mappings into the Common model, and then capturing the Consumer mappings into the Common model, then we can relatively simply derive transformations between the Consumer dialect and the Provider dialect. Any changes to the provider, or the Common model would simply require a re-generation of the transformation code that would then execute on the client. This sounds sort of logical…unless my logic has become skewed somehow 🙂

So this hybrid approach simply blends the best of a fully decoupled SOA approach with the runtime efficiencies of a tightly coupled P2P approach, based on the fact that the design-framework is declarative and can reduce the cost of change so as to mitigate the risk of P2P solutions.

I’m going to explore this in more detail, but I’m confident there’s a way of getting the best from both worlds…unless the SOA police catch me first…

Posted by Stew Welbourne

Posted by Stew Welbourne